Wanda

A Simple and Effective Pruning Approach for LLMs

Introduction

Pruning is a critical technique for reducing the size and computational cost of Large Language Models (LLMs), but different pruning methods vary in how they balance efficiency and accuracy retention. Wanda (Pruning by Weights and Activations) is a simple, activation-aware pruning approach that improves upon traditional magnitude pruning while avoiding the computational burden of second-order pruning methods like SparseGPT. To understand its advantages, we can evaluate Wanda along four key aspects:

- Granularity: Wanda performs layer-wise unstructured pruning, meaning it removes individual weights rather than full neurons or heads. It also supports semi-structured pruning (e.g., 2:4 or 4:8 sparsity, patterns that GPUs can accelerate).

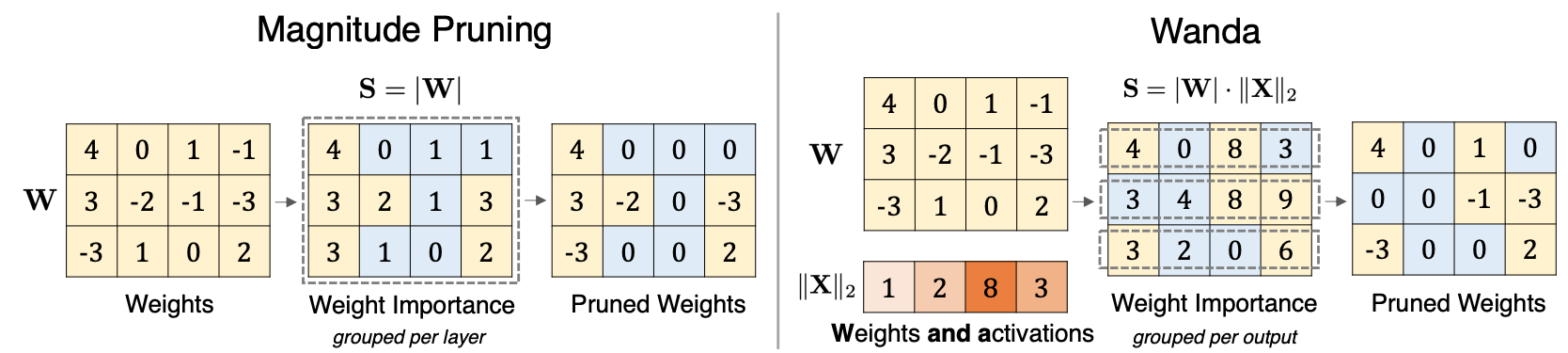

- Pruning Criteria: Wanda improves traditional magnitude pruning by incorporating activations into the importance metric. This ensures that weights connected to highly active neurons are preserved, preventing the removal of small but functionally important weights.

- Pruning Ratio: Wanda can be applied to various sparsity levels, supporting unstructured sparsity for accuracy retention and N:M sparsity for hardware acceleration.

- Need for Retraining: Wanda is a one-shot pruning method, which applies pruning without retraining or iterative weight reconstruction, making it much faster and easier to implement. This also makes Wanda practical for large-scale LLM deployments where retraining is infeasible.
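To make the N:M idea from the Granularity point concrete, here is a minimal sketch in plain Python. It assumes that "2:4" means keeping the 2 highest-scoring weights in every group of 4 consecutive weights. The function name `n_m_mask` and the example scores are hypothetical, chosen only for illustration.

```python
def n_m_mask(scores, n=2, m=4):
    """Keep the n highest-scoring entries in every group of m consecutive weights.

    `scores` is a list of rows (one row per output neuron); each row length
    must be a multiple of m. Returns a same-shaped list of booleans
    (True = keep the weight, False = prune it).
    """
    mask = []
    for row in scores:
        if len(row) % m != 0:
            raise ValueError("row length must be a multiple of m")
        row_mask = [False] * len(row)
        for start in range(0, len(row), m):
            group = range(start, start + m)
            # Indices of the n largest scores inside this group.
            keep = sorted(group, key=lambda j: row[j], reverse=True)[:n]
            for j in keep:
                row_mask[j] = True
        mask.append(row_mask)
    return mask


# Made-up importance scores for one output neuron with 8 inputs.
scores = [[0.9, 0.1, 0.4, 0.3, 0.2, 0.8, 0.7, 0.05]]
mask = n_m_mask(scores, n=2, m=4)
print(mask)
```

Because every group of 4 keeps exactly 2 weights, the overall sparsity is fixed at 50%, and the regular pattern is what allows hardware to skip the zeros.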

Research Gap

So why does traditional magnitude pruning fail for LLMs, and why do we need another solution?

LLMs are computationally expensive, making model compression techniques (quantization, pruning) essential. Pruning is a widely used technique that removes unimportant weights, reducing model size while maintaining performance. However, most existing pruning methods either:

- Require retraining, which is impractical for billion-scale models.

- Use expensive second-order weight updates, making pruning computationally costly. (A second-order weight update refers to a pruning method that uses second-order (Hessian) information to determine which weights to remove. This approach takes into account not only the magnitude of the weights but also their impact on the loss function, making it more precise than simple magnitude-based pruning.)
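As a rough illustration of what "second-order" means, the classic Optimal Brain Surgeon formulation can be written as below. This is an assumption about which variant to show: SparseGPT uses a layer-wise adaptation of the idea, not necessarily this exact form. The symbols \(H\), \(w_q\), \(e_q\), and \(\varepsilon_q\) are notation introduced here, not taken from the text above. Around a trained minimum, where the gradient is close to zero, the loss change caused by a weight perturbation \(\delta w\) is approximated by the Hessian term:

\[\delta L \approx \tfrac{1}{2}\,\delta w^\top H\,\delta w, \qquad \varepsilon_q = \frac{w_q^2}{2\,[H^{-1}]_{qq}}, \qquad \delta w = -\frac{w_q}{[H^{-1}]_{qq}}\,H^{-1} e_q\]

Here \(\varepsilon_q\) is the estimated loss increase from removing weight \(w_q\), \(\delta w\) is the compensating update applied to the remaining weights, and \(e_q\) is the unit vector selecting weight \(q\). The cost comes from forming and inverting \(H\), which is what makes these methods expensive at LLM scale.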

Magnitude pruning, however, does not work well for LLMs, because it removes the weights with the smallest absolute values without considering their role in the network. While effective for smaller models, it fails dramatically for LLMs for the following reasons:

- Once LLMs reach a certain size (~6B+ parameters), some hidden state features have exceptionally large magnitudes. These outlier features are:

  - 100× larger than typical activations.

  - Sparse but highly influential for the model’s predictive performance.

  - Essential to keep: removing them severely degrades LLM performance.

Since magnitude pruning ignores activations, it may remove small weights that are actually connected to these important high-magnitude features, causing major performance loss.

- Traditional deep networks (CNNs, small transformers) contain many redundant parameters. LLMs, however, are highly optimized, meaning their weights are more interdependent. Magnitude pruning disrupts critical pathways, making LLMs harder to prune effectively.

- The importance of a weight depends not only on its magnitude but also on the input activations. For example, a small-magnitude weight connected to a highly active neuron may be crucial, but magnitude pruning removes it anyway. LLMs exhibit high activation imbalance, making activation-aware pruning necessary.

- In OPT, LLaMA, and Pythia models, magnitude pruning fails catastrophically. Even at 10–20% sparsity, performance drops drastically. In some cases, pruned models completely fail (perplexity skyrockets).
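The outlier argument above can be made concrete with a toy calculation. The numbers are hypothetical: one output neuron, one small weight on a feature that is 100× larger than typical, and one larger weight on a typical feature. The sketch only shows which weight magnitude pruning would drop and how much the neuron's output would change in each case.

```python
# Hypothetical values for a single output neuron with two input features.
weights = {"small_weight_on_outlier": 0.05, "large_weight_on_typical": 0.50}
activations = {"small_weight_on_outlier": 100.0, "large_weight_on_typical": 1.0}

# Magnitude pruning looks only at |weight|, so it drops the smaller one.
pruned_by_magnitude = min(weights, key=lambda name: abs(weights[name]))

# Change in the neuron's output if each weight were set to zero instead.
output_change = {
    name: abs(weights[name] * activations[name]) for name in weights
}

print(pruned_by_magnitude)
print(output_change)
```

In this made-up case the weight that magnitude pruning removes is the one whose removal changes the output the most, by roughly a factor of ten.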

Solution: Wanda

Wanda (Pruning by Weights and Activations) is a simple and effective method for pruning pretrained LLMs without retraining or weight updates. Instead of relying only on weight magnitude, it also considers input activations, leading to better sparse sub-networks. Here is how it works:

Step 1: Compute Input Activation Norms Using Calibration Data

- Since activations are input-dependent, Wanda estimates them using a small calibration set (e.g., 128 sequences from C4).

- A single forward pass is run through the model to obtain input activations (\(X\)).

- Compute the L2 norm of the activations for each input feature \(j\) across all input tokens: \(\|X_j\|_2 = \sqrt{\sum_{\text{tokens } t} X_{tj}^2}\). (Wanda operates at the layer level, so \(j\) indexes the input features of a specific layer, and \(X_j\) collects the activations of feature \(j\) across all tokens in the calibration dataset.)

- This norm summarizes the overall activation strength of each input feature (each input to the layer) over the calibration tokens. It is an aggregate over tokens rather than an average.
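A minimal sketch of Step 1 in plain Python, assuming the layer's input activations arrive as batches of token vectors. The function name `feature_l2_norms` and the tiny calibration data are hypothetical. A real implementation would more likely collect these statistics with tensor operations inside a forward hook.

```python
import math


def feature_l2_norms(batches):
    """Return one L2 norm per input feature, taken over all tokens.

    `batches` is an iterable of batches; each batch is a list of token
    vectors (lists of floats) that all have the same length d_in.
    """
    sum_sq = None
    for batch in batches:
        for token in batch:
            if sum_sq is None:
                sum_sq = [0.0] * len(token)
            # Accumulate squared activations feature by feature.
            for j, value in enumerate(token):
                sum_sq[j] += value * value
    if sum_sq is None:
        raise ValueError("no calibration tokens were provided")
    return [math.sqrt(s) for s in sum_sq]


calibration_batches = [
    [[3.0, 1.0], [4.0, 0.0]],  # batch 1: two tokens, two features
    [[0.0, 2.0], [0.0, 2.0]],  # batch 2: two tokens, two features
]
norms = feature_l2_norms(calibration_batches)
print(norms)
```

Keeping a running sum of squares means the activations themselves never need to be stored, only one number per input feature.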

Step 2: Compute Weight Importance

Instead of pruning based on weight magnitude, Wanda computes an importance score for each weight:

\[S_{ij} = |W_{ij}| \cdot \|X_j\|_2\]

where:

- \(\vert W_{ij} \vert\) is the absolute value of the weight connecting input feature \(j\) to output neuron \(i\).

- \(\|X_j\|_2\) is the L2 norm of input feature \(j\), computed per layer from the calibration data.

The key idea here is that if a weight \(W_{ij}\) is small but is connected to a high-activation feature (\(X_j\)), it gets higher importance. This prevents the model from pruning weights connected to critical neurons.
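A small worked example of the score, using hypothetical numbers for a layer with 2 output neurons and 3 input features, where feature 0 is an outlier. The helper names `wanda_scores` and `least_important` are made up for this sketch. It compares which weight in each row looks least important under plain magnitude and under the activation-aware score.

```python
def wanda_scores(weights, input_norms):
    """S[i][j] = |W[i][j]| * ||X_j||_2 for a (d_out x d_in) weight matrix."""
    return [
        [abs(w) * input_norms[j] for j, w in enumerate(row)]
        for row in weights
    ]


def least_important(values):
    """Index of the smallest value in a list."""
    return min(range(len(values)), key=lambda j: values[j])


# Hypothetical layer: rows are output neurons, columns are input features.
weights = [
    [0.02, -0.50, 0.30],
    [-0.01, 0.40, -0.60],
]
input_norms = [200.0, 2.0, 1.0]  # feature 0 has an outlier-sized norm

scores = wanda_scores(weights, input_norms)

by_magnitude = [least_important([abs(w) for w in row]) for row in weights]
by_wanda = [least_important(row) for row in scores]

print(by_magnitude)  # column that magnitude pruning would drop first, per row
print(by_wanda)      # column that the activation-aware score would drop first
```

With these numbers, magnitude alone would first drop the tiny weights on the outlier feature (column 0), while the activation-aware score ranks them highest and drops column 2 instead.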

Step 3: Prune the Lowest-Scoring Weights

Instead of pruning across the entire layer, Wanda prunes per output neuron. For each output neuron (\(i\)), Wanda:

- Sorts its weights by importance score (\(S_{ij}\)).

- Prunes the lowest-scoring weights based on the target sparsity level (\(s\%\)).

This preserves structural balance, preventing the collapse of important neurons.
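The difference between comparing weights per output neuron and comparing them across the whole layer can be sketched as follows. The function names, weights, and scores are hypothetical, and the scores are deliberately constructed so that one neuron has uniformly lower scores than the other.

```python
def prune_per_output(weights, scores, sparsity):
    """Zero the lowest-scoring fraction `sparsity` of weights in each row."""
    pruned = []
    for w_row, s_row in zip(weights, scores):
        n_prune = int(len(w_row) * sparsity)
        # Column indices of this row, sorted from least to most important.
        order = sorted(range(len(w_row)), key=lambda j: s_row[j])
        drop = set(order[:n_prune])
        pruned.append([0.0 if j in drop else w for j, w in enumerate(w_row)])
    return pruned


def prune_per_layer(weights, scores, sparsity):
    """Zero the lowest-scoring fraction across the whole matrix (for contrast)."""
    flat = [(s, i, j) for i, row in enumerate(scores) for j, s in enumerate(row)]
    n_prune = int(len(flat) * sparsity)
    drop = {(i, j) for _, i, j in sorted(flat)[:n_prune]}
    return [
        [0.0 if (i, j) in drop else w for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


# Every score in row 1 is lower than every score in row 0.
weights = [[0.9, -0.8, 0.7, 0.6], [0.1, -0.2, 0.3, 0.4]]
scores = [[9.0, 8.0, 7.0, 6.0], [1.0, 2.0, 3.0, 4.0]]

per_output = prune_per_output(weights, scores, sparsity=0.5)
per_layer = prune_per_layer(weights, scores, sparsity=0.5)

print(per_output)
print(per_layer)
```

At 50% sparsity, the whole-layer comparison zeroes every weight of the second neuron, while the per-output comparison leaves each neuron with half of its weights. The pruned matrix is then used as-is, with no further update.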

Step 4: Use the Pruned Model Directly

Unlike SparseGPT, Wanda does not require weight updates or retraining. The pruned model is ready to use immediately.

Why Wanda works better than magnitude pruning:

- Considers Activations: Preserves weights linked to important features instead of just using magnitude.

- More Balanced Pruning: Prunes per output neuron, so no single output neuron loses a disproportionate share of its weights.

- No Retraining or Weight Updates: Unlike SparseGPT, Wanda directly prunes weights with minimal computation.

- Fast and Scalable: Needs only a single forward pass over the calibration data and is reported to be about 300× faster than SparseGPT at the pruning step.

Conclusion

Wanda provides an efficient and scalable pruning approach for LLMs by introducing an activation-aware importance metric that significantly improves upon traditional magnitude pruning. By computing weight importance as the product of weight magnitude and the input activation norm, Wanda preserves small weights that are connected to highly active features, which addresses the main failure mode of magnitude pruning. Comparing weights per output neuron within each layer further improves stability, because pruning decisions stay local and no single part of the network is degraded excessively. The pruned model can be used directly, without retraining or weight updates, which makes Wanda an attractive alternative to second-order methods like SparseGPT. It also works with both unstructured and semi-structured (N:M) sparsity and across a range of pruning ratios, so it adapts to real-world deployment constraints. With its fast, one-shot pruning mechanism and competitive performance, Wanda represents an important step toward practical and scalable sparsity in LLMs, opening up new possibilities for efficient inference and deployment in resource-constrained environments.