Automatic Prompt Engineer

Large Language Models Are Human-Level Prompt Engineers

With the recent development of large language models (LLMs), it has become common to treat these models as black boxes and to focus on designing better prompts to get reasonable performance from them. Several forms of prompt engineering exist in the literature, including tuning differentiable soft prompts and tuning hard (natural language) prompts. The second approach is of significant interest because hard prompts are understandable and human-friendly. However, a critical problem with this approach is that, to find a good prompt, humans must run experiments with a wide range of prompts to see which one works best with their language model. Moreover, a prompt that works well for one LLM does not necessarily transfer to another, so this procedure has to be repeated for each LLM. The main focus of this paper is therefore to provide an automatic approach for finding the best hard prompt without human effort. The proposed solution is called Automatic Prompt Engineer (APE).

Let us say that a task is specified by a dataset \(D_{train} = \{ (Q, A) \}\), which is a set of input/output demonstrations. With \(M\) as the prompted language model and \(\rho\) as a single instruction prompt, the input to \(M\) is the concatenation \([\rho;Q]\), and \(M\) is expected to produce \(A\) as its output. To measure how well \(M\) performs, we use a score function \(f\). This score function receives the prompt \(\rho\), the question \(Q\), and the answer \(A\), and returns a numerical score, so we can write it as \(f(\rho, Q, A)\). The objective of APE is to find the instruction prompt \(\rho^*\) such that:

\[\rho^* = \arg\max_{\rho} f(\rho) = \arg\max_{\rho} \mathbb{E}_{(Q,A)} [ f(\rho, Q, A) ]\]

How does APE achieve this objective? It is a three-step solution:

- Generating a set of candidate prompts: In this step, several generative language models (such as T5, GLM, and InsertGPT) are selected and given a set of \((Q,A)\) pairs as samples. Each language model is then asked to generate an instruction for the provided examples. The generated instructions are treated as the candidate prompts. Two input templates are used to generate them: the Forward Generation Template and the Reverse Generation Template. An example is shown in the image below.

- Refining and filtering the candidate prompts: To measure how well these candidate prompts perform, two metrics are used for scoring them:

- Execution Accuracy: A 0-1 loss function that checks whether the model's output matches the expected answer: \(f(\rho, Q, A) = \mathbb{1} [M([\rho;Q]) = A]\).

- Log Probability: \(\log P(A \mid [\rho;Q])\), the log probability of the expected answer given the prompt and the question.

Candidate prompts whose scores are above a specific threshold are selected in this step.

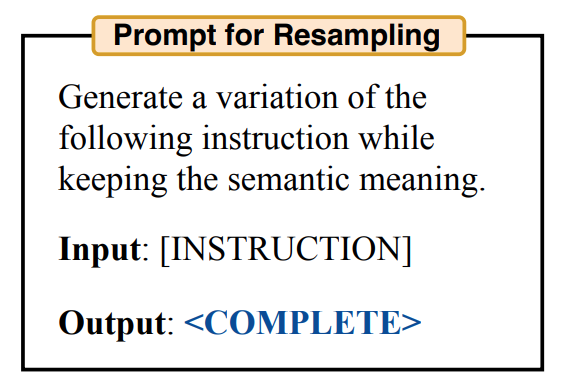

- Choosing the candidate with the highest score: This step is particularly useful when the filtered prompts are not sufficient. Here, the filtered candidate prompts are passed to another LLM, which generates variations of each prompt. This amounts to exploring the search space locally around the current best candidates. Among the generated variations, the one with the highest score is selected as the final instruction prompt.
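
The scoring and selection logic described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the paper's implementation: `toy_model` is a hypothetical stand-in for \(M\), the names `execution_accuracy`, `estimate_score`, and `select_prompt` are invented for this example, the prompt and question are joined with a newline to form \([\rho;Q]\), and the candidate list is hard-coded rather than generated by a language model. The resampling of variations in the last step is left out because it requires a real LLM.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]      # one (Q, A) demonstration
Model = Callable[[str], str]   # maps the text [rho; Q] to an answer


def execution_accuracy(model: Model, prompt: str, q: str, a: str) -> float:
    """0-1 score: 1.0 if the model's output for [prompt; q] equals a."""
    return 1.0 if model(f"{prompt}\n{q}") == a else 0.0


def estimate_score(model: Model, prompt: str, data: List[Example]) -> float:
    """Average the per-example score to approximate E_(Q,A)[f(rho, Q, A)]."""
    return sum(execution_accuracy(model, prompt, q, a) for q, a in data) / len(data)


def select_prompt(
    model: Model, candidates: List[str], data: List[Example], threshold: float
) -> List[Tuple[float, str]]:
    """Keep candidates scoring above `threshold`, sorted best first."""
    scored = [(estimate_score(model, p, data), p) for p in candidates]
    kept = [(s, p) for s, p in scored if s > threshold]
    return sorted(kept, key=lambda sp: sp[0], reverse=True)


def toy_model(text: str) -> str:
    """Hypothetical stand-in for M: it only understands two instructions."""
    prompt, question = text.split("\n", 1)
    if "uppercase" in prompt.lower():
        return question.upper()
    if "reverse" in prompt.lower():
        return question[::-1]
    return question


# Toy task: the hidden instruction is "write the input in uppercase".
train = [("cat", "CAT"), ("dog", "DOG"), ("bird", "BIRD")]
candidates = [
    "Reverse the word.",
    "Write the word in uppercase.",
    "Repeat the word.",
]

ranked = select_prompt(toy_model, candidates, train, threshold=0.5)
best_score, best_prompt = ranked[0]
print(best_score, best_prompt)
```

In this toy setting only the uppercase instruction reproduces every answer in `train`, so it is the only candidate that survives the threshold. With a real LLM, `execution_accuracy` could be swapped for a log-probability score without changing the rest of the sketch.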