Byte Latent Transformer

Byte Latent Transformer: Patches Scale Better Than Tokens

Introduction

The Byte Latent Transformer (BLT) is a novel architecture that eliminates the need for traditional tokenization by directly processing raw bytes. This tokenizer-free approach allows the model to dynamically adapt to the complexity of the input data, making it robust across diverse domains and modalities. By leveraging entropy-based patching, adaptive compute allocation, and a combination of local and global attention mechanisms, BLT introduces a highly efficient and effective solution for sequence modeling tasks.

Research Gap

The research gaps addressed by this paper are as follows:

- Dependency on Tokenizers: Traditional token-based LLMs rely heavily on a pre-defined tokenizer, which determines how raw text is split into tokens. This introduces several issues:

  - Domain Sensitivity: Tokenizers might not perform well across different domains or modalities because they are typically trained on a specific dataset.

  - Input Noise Sensitivity: Tokenizers can be brittle to noisy inputs, like typos or uncommon character combinations.

  - Multilingual Inequity: Fixed token vocabularies can be biased toward high-resource languages, disadvantaging low-resource or morphologically rich languages.

- Not Truly End-to-End: Tokenization adds a heuristic, pre-processing step that prevents LLMs from being purely end-to-end systems. This limits their flexibility and robustness.

- Compute Allocation Inefficiency: Token-based models allocate equal computational resources (attention and processing) to every token, even though not all tokens are equally complex or important. This rigid allocation can lead to inefficiencies, especially for simpler, predictable patterns in the data.

  - For example: Predicting the first character of a new word or sentence often requires more computation than completing a common word (e.g., “M” in “Mozart” is harder to predict than “ozart”).

Solution: BLT

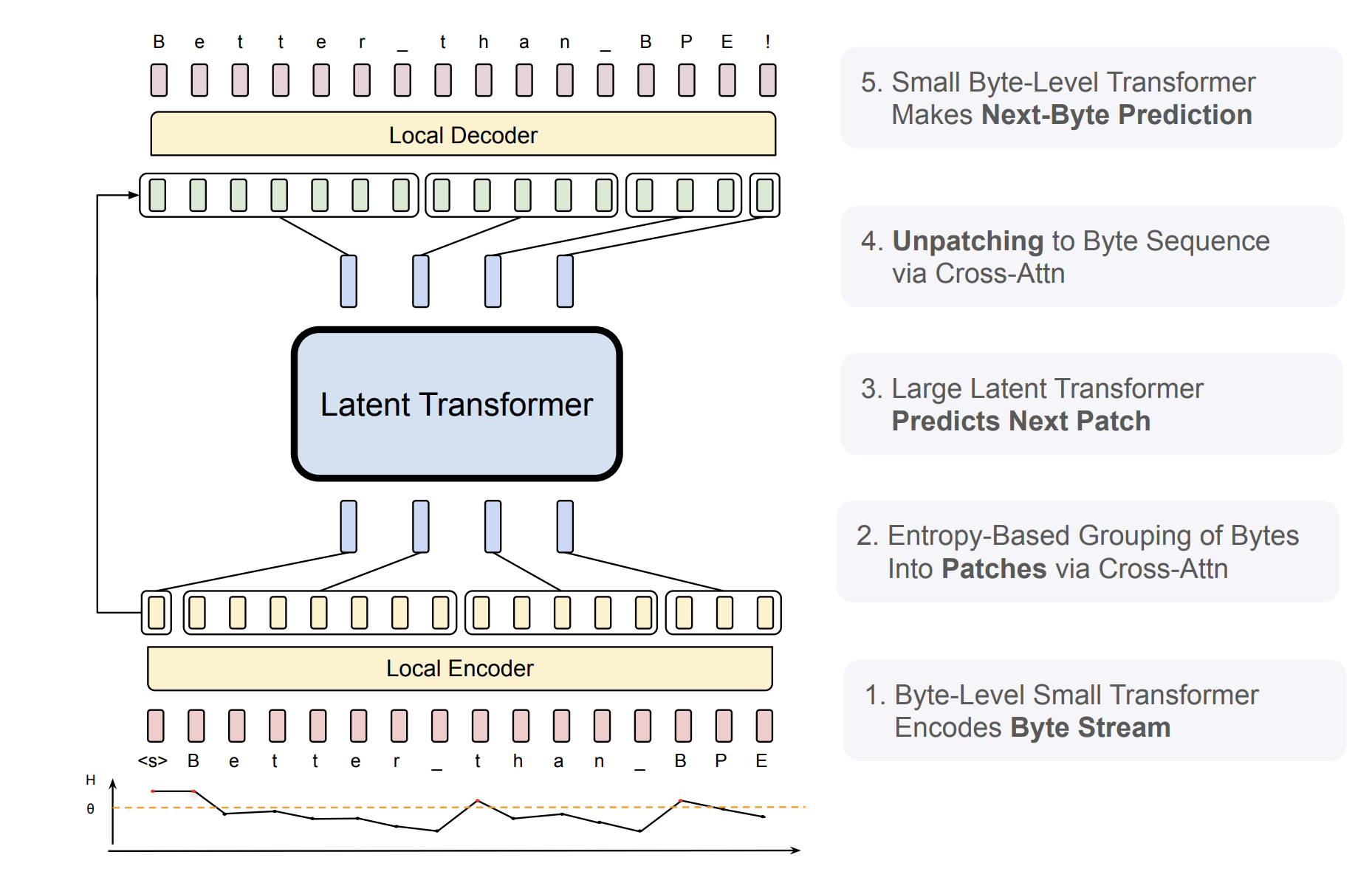

First, here is a quick overview of the main steps in the architecture, which is depicted in the figure above:

- Patch Extraction: Raw text is split into patches using an entropy model.

- Patch Representation: Byte embeddings and hash n-gram embeddings are combined to form enriched representations for each patch.

- Encoder: Processes patch representations using multi-head self-attention and feed-forward layers.

- Latent Transformer: Captures global dependencies across patches using block-causal attention.

- Decoder: Autoregressively generates the output sequence, integrating context from the latent transformer and previously generated bytes.

Now, let us walk through each of these steps in detail.

Patch Extraction

The core idea revolves around patches instead of tokens, and their dynamic creation based on entropy is central to the Byte Latent Transformer (BLT) approach.

What Are Patches?

Patches are groups of consecutive bytes (e.g., characters) created dynamically instead of relying on pre-defined tokens. These patches serve as computation units, replacing traditional tokens.

Entropy and Its Role

Entropy measures the uncertainty or complexity of predicting the next byte in a sequence. High entropy indicates more unpredictable or complex data, while low entropy suggests predictable data. BLT uses entropy to decide where to split the sequence into patches:

- Global Threshold: If the entropy of the next byte exceeds a pre-set threshold, it marks the beginning of a new patch.

- Increasing Trend: If the entropy shows a sharp increase compared to the previous bytes, it signals a context shift and starts a new patch.
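The two rules above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: the function names, both threshold values, and the entropy numbers are made up for the example, and combining the two rules with `or` is an assumption.

```python
def patch_starts(entropies, global_threshold=2.0, jump_threshold=1.0):
    """Return the indices at which a new patch begins.

    Both thresholds are hypothetical values chosen for this sketch.
    """
    starts = [0]  # the first byte always opens a patch
    for i in range(1, len(entropies)):
        over_global = entropies[i] > global_threshold                  # global threshold rule
        sharp_rise = entropies[i] - entropies[i - 1] > jump_threshold  # increasing trend rule
        if over_global or sharp_rise:
            starts.append(i)
    return starts


def split_into_patches(data, starts):
    """Cut a byte string at the given start indices."""
    bounds = starts + [len(data)]
    return [data[a:b] for a, b in zip(bounds, bounds[1:])]


text = b"I go to work."
# Made-up per-byte entropies: high at the start of each new word, low elsewhere.
entropies = [3.0, 0.4, 0.6, 0.3, 0.5, 2.6, 0.4, 0.3, 2.8, 0.5, 0.4, 0.3, 0.2]

starts = patch_starts(entropies)
patches = split_into_patches(text, starts)
print(starts)
print(patches)
```

With these invented entropies, boundaries fall where the toy model is least certain, so predictable stretches end up inside longer patches.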

Why Use Entropy?

This approach ensures that compute is dynamically allocated:

- High-Entropy Regions: Complex parts (e.g., new words, context shifts) get more attention and computational resources.

- Low-Entropy Regions: Predictable data (e.g., spaces, repetitive patterns) is grouped together into longer patches, reducing computational overhead.

By avoiding rigid tokenization, BLT adapts its structure based on the data’s complexity.

Illustrative Example

For a sentence like `I go to work.`, here’s how patch extraction works step by step:

- Input (Raw Bytes): The sentence is represented as a sequence of bytes (ASCII/UTF-8 encoded):

  `"I go to work."` → `[73, 32, 103, 111, 32, 116, 111, 32, 119, 111, 114, 107, 46]`

- Modeling Byte Probabilities: A small byte-level language model estimates the probability of each byte given the preceding bytes.

  - Probability of `73` (`I`) given no prior context.

  - Probability of `32` (space) given `I`.

  - Probability of `103` (`g`) given `I` and space, and so on.

- Entropy Calculation: Entropy measures the uncertainty in predicting each byte. For a byte \(x_i\), the entropy \(H(x_i)\) is calculated as \(H(x_i) = - \sum_{v \in V} p(v \vert x_{<i}) \log p(v \vert x_{<i})\), where:

  - \(V\): The byte vocabulary (all 256 possible byte values, 0–255).

  - \(p(v \vert x_{<i})\): The probability of byte \(v\) given the previous bytes \(x_{<i}\).

- Detecting Patch Boundaries: Using the entropy values \(H(x_i)\), the model identifies boundaries for patches based on:

  - A global threshold (e.g., entropy > threshold marks a new patch).

  - A trend check, where entropy sharply increases compared to previous values.

Resulting Patches for `I go to work.`:

- Patch 1: `I go`

- Patch 2: `to`

- Patch 3: `work.`
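The byte encoding and the entropy formula from this example can be checked with a short Python sketch. The two next-byte distributions below are invented stand-ins for the output of the small byte-level model, and the choice of base-2 logarithms (entropy in bits) is an assumption, since the formula above does not fix the base.

```python
import math


def shannon_entropy(probs):
    """H = -sum(p * log2(p)) over the byte vocabulary, skipping zero entries."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Step 1: the sentence as raw UTF-8 bytes.
raw = list("I go to work.".encode("utf-8"))
print(raw)

# Step 3: entropy of two toy next-byte distributions over the 256 byte values.
# A model with no idea what comes next spreads probability evenly.
uniform = [1 / 256] * 256

# A confident model puts almost all probability on one byte (here: space).
confident = [0.0] * 256
confident[32] = 0.97
confident[46] = 0.03

h_uniform = shannon_entropy(uniform)
h_confident = shannon_entropy(confident)
print(h_uniform, h_confident)
```

The uniform case gives the maximum of 8 bits for a 256-value vocabulary, while the confident case comes out well below 1 bit. A position like the first would be a natural candidate for a patch boundary, and one like the second would stay inside the current patch.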

Patch Representation

Once patches are created, they are converted into patch representations through the following steps:

Byte Embeddings

Each byte in the patch is mapped to a high-dimensional vector using a learned embedding matrix. For example, in Patch 1 (I go):

- Bytes: `I`, space (` `), `g`, `o`

- Byte embeddings: Each byte is independently mapped to a vector.

Adding Context with Hash n-Gram Embeddings

To enrich local context, n-grams (sequences of consecutive bytes) are used. For example, in Patch 1 (I go):

- 2-grams: [`"I "`, `" g"`, `"go"`]

- 3-grams: [`"I g"`, `" go"`]

Each n-gram is hashed into a fixed-size hash table, and the corresponding embedding is retrieved and added to the byte embedding. This provides local context for each byte.
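As a rough sketch of this lookup, the Python below adds hashed 2-gram and 3-gram embeddings to each byte embedding. Everything specific here is an assumption made for illustration: the table size, the embedding width, the use of CRC32 as the hash function, and the choice to attach each n-gram to the byte it ends on. The tables hold random values, standing in for parameters that would be learned during training.

```python
import random
import zlib

DIM = 4            # embedding width (toy value)
TABLE_SIZE = 1000  # rows per hash table (toy value)

rng = random.Random(0)


def random_table(rows):
    """Randomly initialised embedding table, as at the start of training."""
    return [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(rows)]


byte_table = random_table(256)                            # one row per byte value
ngram_tables = {n: random_table(TABLE_SIZE) for n in (2, 3)}


def ngram_slot(gram):
    """Map an n-gram to a table row; CRC32 is a stand-in hash for this sketch."""
    return zlib.crc32(gram) % TABLE_SIZE


def embed(data):
    """Byte embedding plus the embeddings of the n-grams ending at each byte."""
    out = []
    for i, b in enumerate(data):
        vec = list(byte_table[b])
        for n, table in ngram_tables.items():
            if i + 1 >= n:  # enough preceding bytes to form an n-gram
                gram = data[i + 1 - n : i + 1]
                row = table[ngram_slot(gram)]
                vec = [v + r for v, r in zip(vec, row)]
        out.append(vec)
    return out


vectors = embed(b"I go")
print(len(vectors), len(vectors[0]))
```

Because the table has a fixed size, different n-grams can collide in the same row. That trade-off keeps memory bounded no matter how many distinct n-grams appear in the data.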

Training vs. Inference

- Training: The hash table is randomly initialized and updated during training using backpropagation.

- Inference: The trained hash table is used to retrieve embeddings for new input on-the-fly.

Encoder

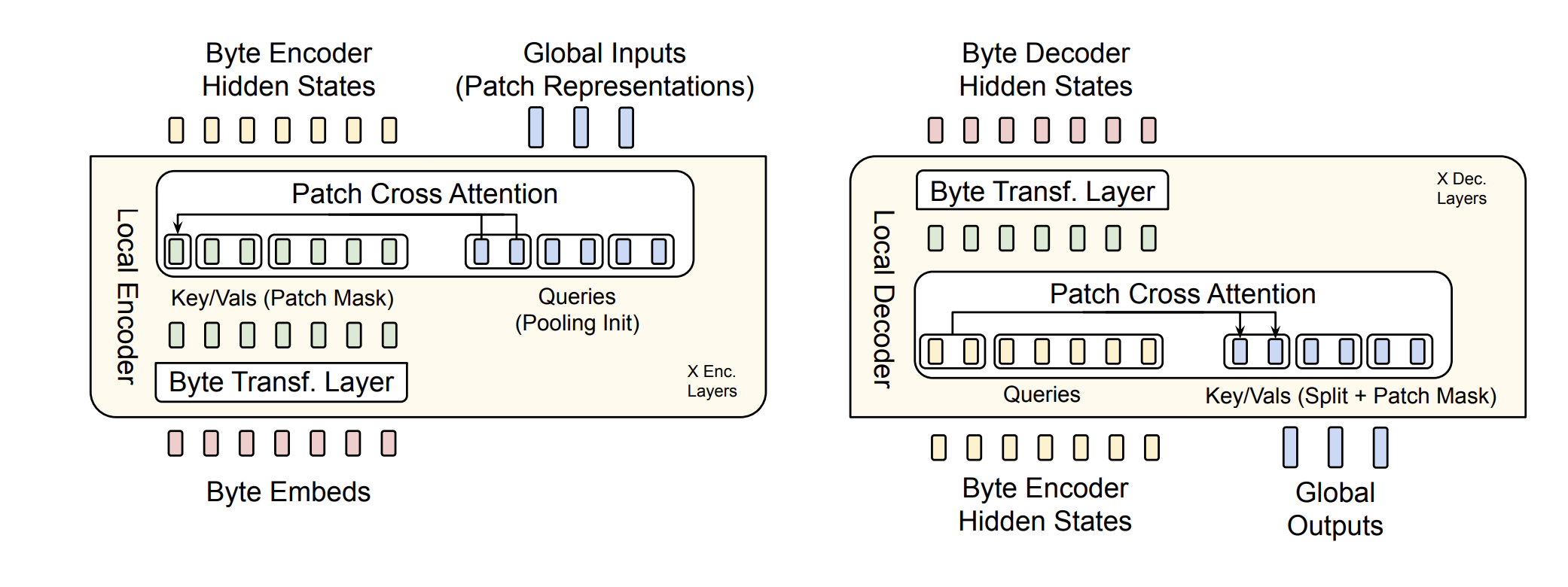

The patch representations are then fed into the encoder, which processes them further to capture dependencies across patches. This is how it works:

- Multi-Head Self-Attention: Similar to BERT, the encoder uses key, query, and value matrices to compute attention. However, instead of tokens, BLT’s encoder operates on patch representations.

- Feed-Forward Layers: Stacked layers of feed-forward networks refine the patch representations.

This allows the encoder to model relationships between patches rather than individual tokens.

Latent Transformer

The latent transformer is a global component in BLT that processes the patch representations to model long-range dependencies across patches. The key features are:

- Block-Causal Attention: Ensures autoregressive behavior, where each patch can only attend to itself and preceding patches (not future ones). For example:

  - Patch 1 (`I go`) attends only to itself.

  - Patch 2 (`to`) attends to Patch 1 and itself.

- Purpose: Captures global context across the sequence and allocates compute dynamically based on patch complexity.
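The attention pattern described above can be written as a boolean mask over patches. The sketch below is illustrative only: the function name is hypothetical, and it reuses the three patches from the earlier example.

```python
def causal_patch_mask(num_patches):
    """mask[i][j] is True when patch i may attend to patch j (j <= i)."""
    return [[j <= i for j in range(num_patches)] for i in range(num_patches)]


patches = ["I go", "to", "work."]
mask = causal_patch_mask(len(patches))

for i, row in enumerate(mask):
    visible = [patches[j] for j, allowed in enumerate(row) if allowed]
    print(f"Patch {i + 1} ({patches[i]!r}) attends to: {visible}")
```

In a real attention layer, the `False` positions would typically be set to negative infinity before the softmax so that future patches receive zero weight.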

Decoder

The decoder generates the final sequence of bytes autoregressively, based on the latent representations from the latent transformer. This is how it works:

- Cross-Attention: Combines information from the latent transformer (patch-level context) and previously generated bytes.

  - Query: Byte representations from the decoder.

  - Key/Value: Patch representations from the latent transformer.

- Byte-by-Byte Generation: The decoder predicts one byte at a time until the entire sequence is generated.
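To make the query/key/value roles concrete, here is a minimal single-head cross-attention sketch in plain Python. It is a simplification under stated assumptions: it omits the learned projection matrices, multiple heads, and masking, and the toy vectors are invented for the example.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(byte_queries, patch_keys, patch_values):
    """Each byte query mixes the patch values, weighted by query-key similarity."""
    dim = len(patch_keys[0])
    outputs = []
    for q in byte_queries:
        # Scaled dot-product score between this byte and every patch.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
                  for k in patch_keys]
        weights = softmax(scores)
        # Weighted sum of patch values, one output dimension at a time.
        mixed = [sum(w * v[d] for w, v in zip(weights, patch_values))
                 for d in range(len(patch_values[0]))]
        outputs.append(mixed)
    return outputs


# Toy data: two decoder bytes querying two patches.
byte_queries = [[1.0, 0.0], [0.0, 1.0]]
patch_keys = [[1.0, 0.0], [0.0, 1.0]]
patch_values = [[10.0, 0.0], [0.0, 10.0]]

out = cross_attend(byte_queries, patch_keys, patch_values)
print(out)
```

Each byte pulls most of its context from the patch whose key best matches its query. In this toy setup the first byte leans toward the first patch and the second byte toward the second.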

Conclusion

BLT addresses the research gaps identified earlier as follows:

- Dependency on Tokenizers: BLT processes raw bytes directly, eliminating the need for a tokenizer and the associated domain, noise, and multilingual biases.

- Not Truly End-to-End: BLT integrates the entire learning process into the model, removing the pre-processing step of tokenization, making the system fully end-to-end.

- Compute Allocation Inefficiency: BLT dynamically groups bytes into patches based on entropy, allocating more compute to complex regions and less to predictable ones, improving efficiency and performance.