Paper Review - Week 47

Here is the list of the most interesting papers published in this week:

FreshLLMs: Refreshing Large Language Models with Search Engine Augmentation

Introduction

This paper introduces FRESHQA, a dynamic question-answering benchmark designed to evaluate the factuality of large language models (LLMs) in the context of changing world knowledge. The paper explores the limitations of existing LLMs, including their struggle with questions involving fast-changing knowledge and false premises. To address these challenges, the authors introduce FRESHPROMPT, a few-shot prompting method that incorporates relevant and up-to-date information from a search engine into the LLM’s prompt.

Research Gap

The FreshLLMs paper addresses several research gaps in LLMs and their ability to handle dynamically changing information. Here are some key research gaps that the paper aims to fill:

- Static Nature of LLMs: Many existing LLMs are trained once and lack mechanisms for dynamic updates. This results in a static representation of knowledge, which can become outdated in a rapidly changing world.

- Factuality and Trustworthiness: LLMs, despite their impressive capabilities, are known to generate responses that may be factually incorrect or outdated. This affects the trustworthiness of their answers, especially in real-world scenarios where accurate and up-to-date information is crucial.

- Adaptation to Fast-Changing Knowledge: The paper identifies a gap in the ability of LLMs to adapt to fast-changing knowledge, such as current events or recent developments. Existing models may struggle to provide accurate answers to questions that require knowledge updates beyond their training data.

- Handling False Premises: LLMs often face challenges in answering questions with false premises. The paper highlights the need to assess how well LLMs can identify and correct false information in the questions they are presented with.

Solution: FreshLLM

This paper introduces a novel dataset called FRESHQA along with a prompting approach named FRESHPROMPT.

FRESHQA

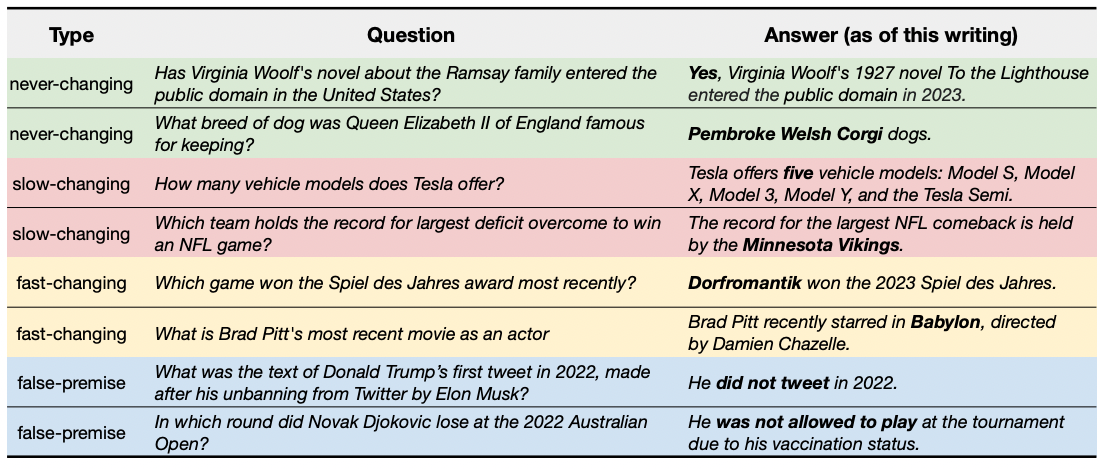

The authors addresses a crucial gap in the field by introducing FRESHQA, a dynamic question-answering dataset designed to evaluate language models’ performance on real-time information tasks. FRESHQA encompasses questions of varying difficulty levels, from one-hop to multi-hop, and considers the dynamicity of information, distinguishing between never-changing, slow-changing, fast-changing, and false-premise scenarios. The dataset is meticulously curated, involving NLP researchers and freelancers to create questions covering diverse topics. Quality control measures, including manual review and removal of duplicates, ensure the dataset’s integrity.

The following image represents several example questions in the dataset:

FRESHPROMPT

In parallel, they propose FRESHPROMPT, a novel prompting approach tailored to facilitate the answering process for large language models. FRESHPROMPT leverages information retrieval from search engines, particularly Google Search, to gather relevant data. Given a question, the system queries the search engine, retrieves various result types, and extracts relevant information, such as text snippets, source, date, title, and highlighted words. The extracted evidence is then incorporated into the model’s prompt, enabling in-context learning. Demonstrations and the ordered list of retrieved evidences structure the prompt, guiding the model in understanding the task and producing accurate answers. The ultimate objective is to establish a benchmark for assessing language models in dynamic QA scenarios and to enhance their adaptability to real-time information by leveraging search engine data.

Experiments

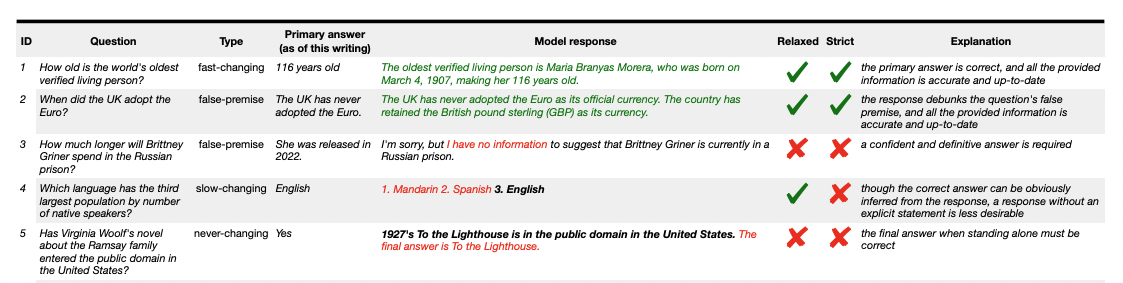

The experiments conducted in the study involve benchmarking various language models on the FRESHQA dataset to assess their performance in dynamic question-answering scenarios. The models under evaluation span a range of sizes, from 770M to 540B parameters, and include well-known pre-trained models like T5, PALM, FLAN-T5, GPT-3.5, GPT-4, CODEX, and CHATGPT, among others. Two distinct evaluation modes, RELAXED and STRICT, are employed to measure correctness and hallucination in the models’ responses. The RELAXED evaluation focused on measuring the correctness of the primary answer, allowing for some flexibility in accepting ill-formed responses. In contrast, the STRICT evaluation scrutinized correctness but also whether any hallucination was present in the response.

The following image shows several evaluations:

The main findings highlight the challenges that existing large language models face in handling questions involving fast-changing knowledge and false premises. Regardless of model size, all models exhibit limitations in these scenarios, emphasizing the need for improvement. The study also reveals that increasing model size does not consistently lead to improved performance on questions with rapidly changing information.

Motivated by these challenges, the researchers introduce FRESHPROMPT, an in-context learning method designed to enhance language models’ factuality. FRESHPROMPT leverages search engine outputs to provide up-to-date information, resulting in substantial accuracy improvements. The experiments demonstrate that FRESHPROMPT outperforms other search engine-augmented prompting methods and commercial systems.

Conclusion

In conclusion, the study delves into the challenges faced by LLMs in handling dynamically changing information and questions with false premises. The introduced FRESHQA dataset serves as a valuable benchmark to assess the factuality of language models, revealing their limitations and the pressing need for improvement. The proposed FRESHPROMPT method showcases promising results, significantly boosting model performance by incorporating real-time information from search engines. This underscores the potential of in-context learning approaches to enhance language models’ adaptability to evolving knowledge.

Here are some more articles relevant to this one: