Paper Review - Week 35

Here is the list of the most interesting papers published in this week:

RecMind: Large Language Model Powered Agent For Recommendation

Introduction

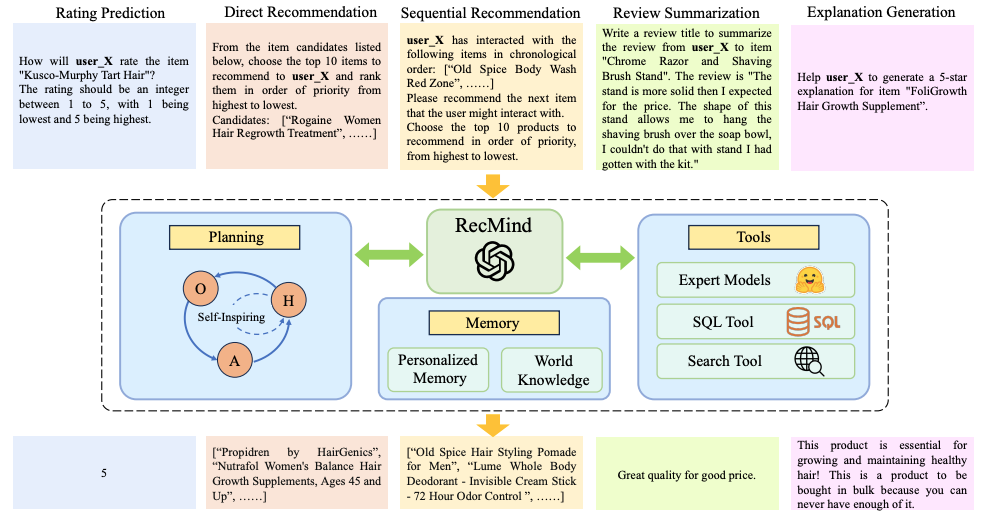

The paper is introducing a novel recommendation system called RecMind that utilizes Large Language Models (LLMs) as its core component. The system integrates various tools, such as a database tool for accessing domain-specific knowledge, a search tool for real-time information, and a text summarization tool. RecMind also employs a planning mechanism that breaks down complex recommendation tasks into smaller, manageable sub-goals. One of the key highlights of the paper is the introduction of the Self-Inspiring (SI) planning algorithm, which enables the system to consider all previous planning paths at each intermediate step, leading to better decision-making and recommendations.

Research Gap

This paper focuses of addressing the following research gaps:

-

Limited Use of External Knowledge: Most existing recommendation systems often struggle to effectively utilize external knowledge due to constraints in model scale and data size. RecMind includes a database tool, a search tool, and a text summarization tool. These tools enable RecMind to access external knowledge beyond what is inherently present in the LLM’s parameters.

-

Lack of Generalizability: Current recommendation methods are often designed for specific tasks and struggle to generalize to unseen recommendation scenarios.RecMind incorporates a planning mechanism that breaks down complex tasks into smaller, manageable sub-goals. This planning approach enables RecMind to efficiently handle diverse recommendation scenarios, thereby enhancing its generalizability across various tasks.

-

Underutilization of Reasoning Capabilities: The reasoning capabilities of LLMs are not fully leveraged in existing research, leading to suboptimal performance in complex recommendation tasks.RecMind proposes the Self-Inspiring (SI) planning algorithm, which allows RecMind to consider all previous planning paths at each intermediate step. By retaining and utilizing historical planning information, RecMind’s SI algorithm significantly enhances the model’s ability to comprehend complex recommendation tasks and make better-informed decisions.

Solution: RecMind

RecMind is composed of three components: Planning Component, Memory Component, and Tools. Let us have a brief overview of each of these components:

- Planning Component: The planning component is pivotal in breaking down the entirety of the recommendation task into more manageable sub-goals. This process is facilitated by the implementation of the Self-Inspiring (SI) algorithm, which enables the system to iteratively plan and execute tasks by considering previous states.

- Memory Component: The memory component is divided into two categories: Personalized Memory and World Knowledge. Personalized Memory encompasses the historical interactions of the user, thereby enabling RecMind to understand individual preferences and behaviors. On the other hand, World Knowledge encompasses a broader spectrum, incorporating metadata about items as well as real-time data sourced from the web. This collective memory framework empowers RecMind with a profound understanding of user preferences and item characteristics.

- Tools: The tools component plays a crucial role in providing the necessary data for the World Knowledge segment. This component can access various tools, including Database Tools, Search Tools, and Text Summarization Tool. These tools serve the purpose of enriching RecMind’s knowledge base, allowing it to efficiently acquire external information and enhance the depth and precision of its recommendations.

Here is a brief overview of the architecture of RecMind:

Self-Inpiring (SI) Algorithm

This algorithm is inspired by the Tree-of-Thoughts approach. So, let us first define SI: The SI algorithm is a novel planning technique that allows the system to consider all previous planning paths at each intermediate step. It generates better planning by integrating multiple reasoning paths, retaining all previous states from the history of paths when generating a new state. SI focuses on utilizing all historical states to provide more comprehensive and informed decision-making, thereby improving the overall planning ability of RecMind.

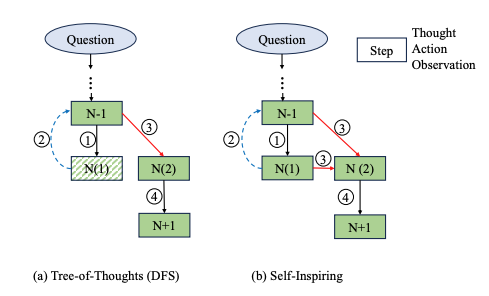

To compare SI with ToT, both methods fundamentally involve the exploration of a tree of possible paths or thoughts. However, the crucial distinction lies in the approach to traversal within this tree.

In the ToT method, various traversal strategies like BFS or DFS are used to navigate the tree, enabling the consideration of different paths but possibly leading to the exclusion of certain historical states or paths based on the selected search strategy.

Conversely, the SI algorithm conducts an exhaustive search over the entire tree, ensuring that all historical states and paths are thoroughly explored and considered during the recommendation process. This exhaustive search approach in SI aims to leverage all available historical information, leading to a more comprehensive decision-making process.

Therefore, while both approaches involve navigating a tree of potential paths, the distinction lies in the traversal methodology, with ToT employing various traversal strategies and SI conducting a comprehensive exploration of the entire tree.

A comparison of SI and ToT is depicted in the following figure:

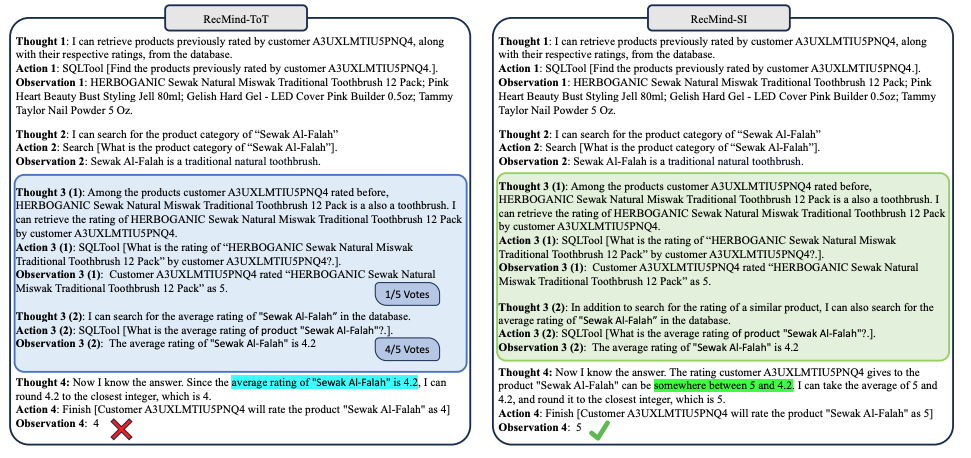

Also, an example scenario for prompting in each of these approaches is mentioned in the following figure:

Experiments

The experiments conducted in the paper aimed to evaluate the performance of the proposed recommendation system, RecMind, in various recommendation tasks: sequential recommendation, point-wise, list-wise tasks . The primary aspects of the experimental setup are outlined below:

The experimental setup involved the use of three popular datasets sourced from Amazon, namely Sports & Outdoors, Beauty, and Toys & Games, each containing specific user IDs, item IDs, ratings, textual reviews, and timestamps. The datasets were divided into training, validation, and testing sets.

The evaluation metrics utilized were Hit Ratio (HR), Normalized Discounted Cumulative Gain (NDCG) for sequential and top-N recommendation, and BLEU and ROUGE for generated explanations. Also, the core LLM used in RecMind is GPT3.5.

The performance of RecMind was compared with multiple baselines, including P5, ChatGPT (zero-shot and few-shot), and RecMind-CoT, as well as RecMind-ToT with BFS and DFS. Additional baselines were considered for each task to ensure comprehensive evaluation.

Conclusion

In conclusion, this paper introduces RecMind, a comprehensive recommendation system that leverages the capabilities of the large language model GPT-3.5. By incorporating a Planning component that decomposes the recommendation task into manageable subgoals using the Self-Inspiring (SI) algorithm, and a Memory component that comprises both Personalized Memory and World Knowledge, RecMind demonstrates a robust understanding of user-item interactions. The utilization of various Tools, including Database Tools, Search Tools, and a Text Summarization Tool, enables RecMind to access and process the necessary data from diverse sources. Through extensive experiments on diverse recommendation tasks, including sequential recommendation, point-wise, list-wise recommendation, explanation generation, and review summarization, RecMind’s performance is compared with several baselines, showcasing its effectiveness in providing precise and explainable recommendations across various scenarios. The results demonstrate the potential of RecMind in addressing the challenges of recommendation systems and improving user satisfaction and engagement.

Here are some more articles relevant to this one: